Introduction

In recent years, the importance of using secure private messaging applications for communication has come to the forefront of public attention. It’s not uncommon to find news articles written almost weekly on user privacy concerns and what the more tech savvy can do to limit their exposure to interception and monitoring from external parties.

One particularly interesting website is the Electronic Frontier Foundation (EFF) secure messaging scorecard, which aims to assist users in choosing “Which apps and tools actually keep your messages safe”.

This type of score card drastically simplifies the problem domain, and leads one to question what the tradeoffs are when installing an application from the list. While the advocacy of privacy based communication is something we love to see reach a mainstream audience, we believe the scorecard misses many considerations and metrics that are critical to the discussion.

As is commonly understood by those in the security community it’s a very hard problem to quantify, and it’s important to consider how the more functionality an application has, the higher degree of risk it poses due to an increase in attack surface. This is amplified when using an application which has not undergone the same rigorous testing of time and usage that more popular (yet less “privacy conscious”) applications have.

This blog post is the first in a series, in which we review the documentation and source code of a subset of applications in the EFF scorecard to understand their privacy versus security tradeoffs.

This is a subtle and often overlooked difference - as passive monitoring may be disrupted with the use of encrypted communications, but attacks against software vulnerabilities can negate the advantage of using those IM applications in the first place. We believe sharing this perspective is important to assist users in deciding on the right balance between privacy and security.

RetroShare

To begin the discussion, we chose to perform a code review of Retroshare. This application enables users to create encrypted connections to friends over a decentralized network. It supports features such as Chat, Voice and Video, Mail, File Sharing, Forums, Channels and Tor.

The application itself was chosen from the scorecard in a semi-random fashion, namely it received top marks in all columns except for “Has there been any recent code audit?” which peaked our curiosity.

We placed several constraints on ourselves for the purpose of the review, hoping the results could be extrapolated to form an opinion on the overall code quality of the application:

-

The focus of reviews will be on implementation vulnerabilities and not design level issues in cryptography or architecture,

-

The reviews themselves will be time constrained to up to 24 hours of effort

-

Any identified vulnerabilities will be reported following responsible disclosure practices.

Review process

The review was executed over 24 hours (spanning a few weeks), performing manual code review and proof-of-concept development for select findings. The table below is a general run-through of our activities during this timeframe:

| Hour | Notes |

|---|---|

| +1 | Action: Review the applications on the EFF scorecard. Select first candidate based on check boxes. Action: Visit the application website, review its intended user base / functionality. Download the source code. Action: Review the websites user and developer documentation. Outcome: The documentation appeared to provide a nice roadmap that can assist in development, however is mostly lacking in content. As a result, it wasn’t useful in understanding the codebase and was mostly ignored. |

| +2 | Action: Compile the application with full debug support. Action: Load the application into a code review tool (Source Insight) Review: Survey the application dependencies and third party libraries in use Outcome: A couple of statically compiled external dependencies (e.g. libgpg-sdk, libpegmarkdown) which can pose problems for patch management. |

| +3-5 | Review: Audit for implementation specific vulnerabilities Outcome: Several findings were identified that range across target components and vulnerability classes |

| +6-7 | Action: Created two virtual machines (“attacker” and “victim”) to perform runtime analysis. Action: Send messages between clients running under a debugger and trace data flow. Outcome: The project was very easy to setup for debugging and testing |

| +8 | Action: Have a cold iced tea and watch an episode of It’s Always Sunny in Philadelphia Outcome: lol’d, feeling refreshed. |

| +9 | Action: Review the distribution binaries, ensuring compile time hardening options are enabled by default Outcome: The Windows binary appears to be cross-compiled with gcc toolchain. The binaries are missing several useful compile time mitigations. |

| +10-12 | Action: Validated a selection of findings from the source code review to ensure reachability Outcome: Developed some basic proof-of-concept exploits |

| +13-16 | Action: Commence threat modelling of components to aid in code review coverage Outcome: Identified additional attack surface by reviewing data flows and sub-components |

| +17-21 | Action: Typed up an advisory and sent to developers Outcome: Reply within 24 hours |

| +22 | Action: Found Travis configuration indicating the project makes use of the open source Coverity static analysis scanner. The scan results are available here. Outcome: Surprisingly, a large number of our issues were not identified in the static analysis scan results. The vulnerabilities identified by the scanner did not present a compelling summary of the types of vulnerabilities identified with manual code review. |

| +23-24 | Action: Eat, bathe, and start blog post draft. Outcome: Smell better, look better, feel better. |

Key outcomes

-

Code attack surface: The RetroShare codebase is feature-rich and presents considerable attack surface across application components. This creates some complexity in scoping such a quick code review and determining where to focus our efforts first. As an example, we could focus on subtle low level vulnerabilities such as memory corruption, or alternatively focus on high level constructs such as HTTP protocol handling and potential logic issues. No particular restrictions were placed to this end, instead attention was paid towards quickly surveying for exploitable issues.

-

Systemic issues: At first glance, it was clear the codebase suffered from systemic “insecure coding practice” issues such as inconsistent return value checking and error handling, poor usage of explicit and implicit typecasting, and relaxed handling of adverse security edge-cases. While it’s an indication of the codes overall lack of maturity, these issues were mostly glossed over in favor of investigating attacks close to the surface and exploitable in our timeframe.

-

PoC development: During the review we developed a few proof of concept exploits to illustrate the impact of identified issues, and solidify our understanding of the attack vectors. However given time restrictions we had to be selective, choosing to write PoC for findings that wouldn’t eat into our review time unnecessarily. The findings ranged from weak binary protections, out of bound memory reads and remote memory corruption, to various web-like vulnerabilities. Below are two such examples of these findings:

-

The following code snippet illustrates a remote int overflow/heap corruption in VOIP video decoding (no PoC)

Chunk: comes off the wire, data and size are arbitrary. Image: becomes the rendered image

…

#define AV_INPUT_BUFFER_PADDING_SIZE 32

…

bool FFmpegVideo::decodeData(const RsVOIPDataChunk& chunk,QImage& image)

{

#ifdef DEBUG_MPEG_VIDEO

std::cerr << "Decoding data of size " << chunk.size << std::endl;

std::cerr << "Allocating new buffer of size " << chunk.size - HEADER_SIZE << std::endl;

#endif

uint32_t s = chunk.size - HEADER_SIZE ; <- [1]: s in range MAX_UINT-28..MAX_UINT

#if defined(__MINGW32__)

unsigned char *tmp = (unsigned char*)_aligned_malloc(s + AV_INPUT_BUFFER_PADDING_SIZE, 16) ; <- [2]: s arithmetic overflow to ~0 sized alloc

#else

unsigned char *tmp = (unsigned char*)memalign(16, s + AV_INPUT_BUFFER_PADDING_SIZE) ; <- [2]: s arithmetic overflow to ~0 sized alloc

#endif //MINGW

if (tmp == NULL) {

std::cerr << "FFmpegVideo::decodeData() Unable to allocate new buffer of size " << s << std::endl;

return false;

}

/* copy chunk data without header to new buffer */

memcpy(tmp, &((unsigned char*)chunk.data)[HEADER_SIZE], s); <- [3]: memcpy using s, leading to memory corruption of tmp

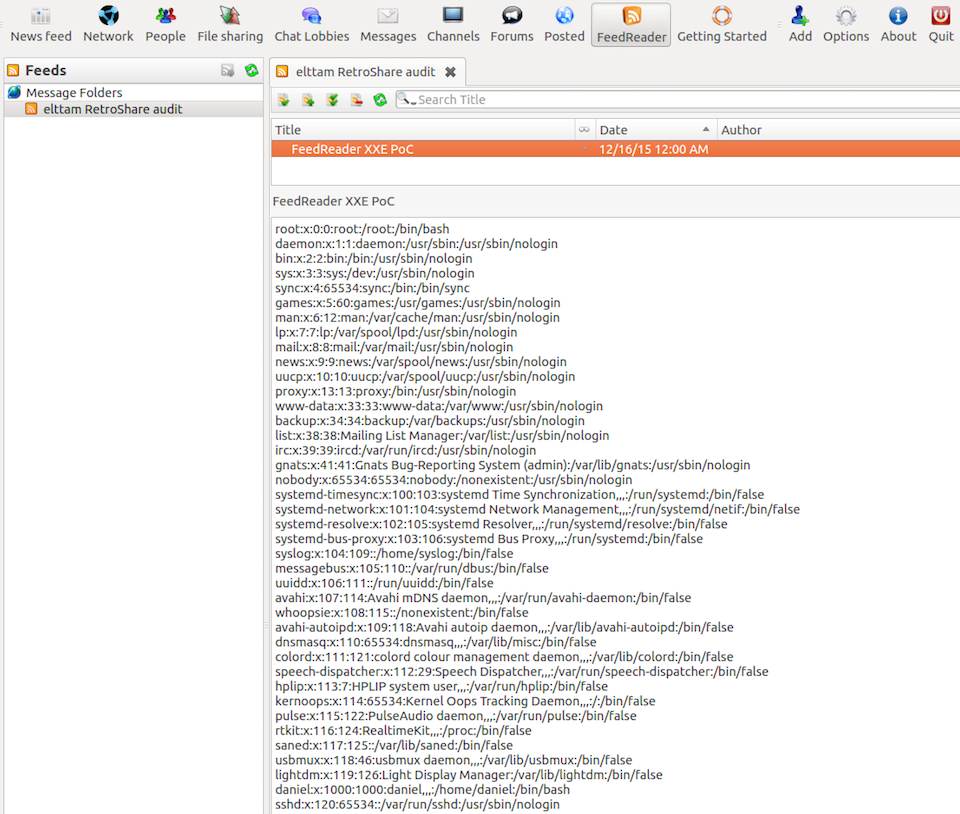

- The following screenshot demonstrates a remote XXE attack rendered by a victim, as a result of consuming a malicious RSS feed:

-

Advanced attack scenarios: While PoC’s were not developed for everything, it’s important to realise the impact of exploiting some of the issues. As an example, integer overflows resulting in out of bounds read issues exist which could potentially be exploited ala heartbleed for critical information disclosure.

-

Vendor response: After writing up the vulnerabilities and submitting to the RetroShare team, it was nice to see a fast turnaround time in e-mail correspondence and patching. A big kudos to them for their handling of such security reports.

Process Improvements

The results of the audit identified vulnerabilities that required little time investment for exploit development. We believe given the nature of the scorecard and the inherited threat model, all the applications listed need to be proactive in understanding and hardening their security posture.

So, what are some of the approaches that can achieve this? And more importantly, what are their specific considerations? Well, there’s a few options that are fairly common, including running bug bounties, performing static analysis, and having experienced manual security reviews. All of these options can occur at different times or perhaps in parallel, but it really depends on the circumstances and security maturity of the project. Let’s think about it…

-

Bug bounties: Running a bounty can be a useful exercise in promoting awareness of an application and requesting participation from the security community at large. The main benefit is rapid discovery of “low-hanging fruit”, which could be rewarded with a kudos-based bounty (#morefreebugs?).

The problem this approach can face is a failure to mitigate vulnerabilities at their root cause, and may instead lead to a dangerous find-fix-deploy cycle which fractures the security posture of the application in time. As the codebase matures, skilled adversaries may gain an advantage in auditing for complex vulnerabilities.

In our opinion if a project has a robust design where common bug classes are eradicated, it’d be reasonable to run paid bounties where the rewards are high to entice skilled resources to audit for more intricate and expensive attacks.

-

Static analysis: There are both commercial and free static analysis tools available, and each comes with its own list of pros and cons. In the case of RetroShare, the results produced by the Coverity scanner included significant noise and did not appear to be useful in guiding security fixes. It also produced false-negatives in which many vulnerabilities identified with manual auditing were not reported.

In either case, if static analysis was used correctly it could help automate simple vuln discovery while improving code quality over time.

-

Fuzzing: A common method for automating the process of bug discovery is to use fuzzing to mutate input and trigger bad states in the target application. Instrumenting the codebase with something like AFL to identify points of failure could be useful, and removes the labor required in manual auditing with full code coverage.

We believe for RetroShare this will prove useful, particularly against the parsing plugins which we only scratched the surface of.

-

Manual review: By performing a preliminary assessment, one can determine the time investment required for a more comprehensive manual code review. This type of review is useful when performed in tandem with developers, as the focus can be on strengthening the overall security posture of a project.

The main requirement is to ensure the applications architecture and interfaces are well documented to expedite a code auditors comprehension and ability to identify subtle vulnerabilities. This type of review can become counterproductive when root causes are not well understood or mitigated.

Conclusion

Our preliminary review identified vulnerabilities that show the impact security vulnerabilities can have on privacy applications. The design of such systems need to consider a multitude of attacks, and require a strong foundation on which the privacy functionality can then be built.

In the case of RetroShare, the features offered by the project combined with its ease of use make it ideal for end users looking to install an IM application with inbuilt privacy controls. It’s important to note that while the preliminary review identified several issues the security work is by no means complete. We hope that by following our guidance in the process improvements section the code quality can improve in time.

These kinds of reviews illustrate the need for those in the security community to give back to open source for the benefit of its end users. To those interested, the developers would love to see additional audits, including of the underlying crypto stack and implementation code.

We will be continuing with some follow-up work with RetroShare and then also move onto other popular clients, submitting bug reports and providing guidance where possible. In more formalized reviews, it would be important to consider where the greatest risk of attack is and focus efforts accordingly; for example:

-

Is the threat actor a malicious friend? (largest attack surface)

-

Is the threat actor a malicious server inside the network? (news feeds, file sharing, etc)

-

Is the threat actor somebody who is outside the trusted network? (attacks at the protocol layer)

In the case of the EFF scorecard, we believe it’s a step in the right direction but it’s also critical to mature such guidance beyond what is currently written. The following are some inclusions that can make the scorecard more transparent and reflective of actual privacy and security concerns:

-

Include reference materials to supporting evidence used in creating the matrix; e.g. “Is it encrypted?” may point to relevant default configuration information or source code.

-

Include additional details around the scope, regularity, and completeness of source code reviews - and indications of improved code quality over time.

-

Investigate potential ways to expand the matrix to factor in more security-focused criteria, some possibilities being:

-

Hardening protections: how is the application hardened, e.g. binary protections and security policies to factor in defense-in-depth.

-

Attack surface: how is the application minimising and managing its attack surface, including external dependencies.

-

Security assurance: details for how the application is performing security assurance activities with linked evidences.

-

We hope this post assists those interested in privacy to understand various security considerations that are important. A special thanks to Cyril from the RetroShare team for the quick responses and time spent contributing to open source, and to the EFF for their work dedicated to users online digital rights.

For those interested in seeing the original vulnerability report, specific details of the findings can be found here: RetroShare Advisory 1 - elttam.pdf.

There will be more findings reported and patched soon - please follow @elttam if interested in tracking this and other research and news from our team.